Artificial intelligence could revolutionize political campaigns, advertising

Generative AI brings potential benefits as well as deceptive techniques and damage

Artificial intelligence has permitted what has become known as “deepfakes,” which are inauthentic depictions of people who are usually famous, saying or doing things that are unusual and attention-drawing.

CBS News recently reported on celebrities seeking to have their likenesses protected by copyright law so that they aren’t victims of deepfakes. Misleading and outright false ads are also expected in the political realm.

Recently, two University of North Carolina researchers released a paper that discussed political ads and the types of oversight that have been attempted thus far. Scott Babweh Brennen co-authored the paper, The New Political Ad Machine: Policy Frameworks for Political Ads in an Age of AI. I spent a half-hour speaking to him about the topic.

“I don't think there's necessarily a reason to think that's all bad,” Brennen said. “I think there's definitely a reason to think that it could just make it easier for smaller advertisers, smaller campaigns to make higher quality ads.”

But there’s also a potential risk.

“At the same time, then there's the other sort of side of things, which is what gets far more attention, which is the concern about deceptive content,” Brennen said.

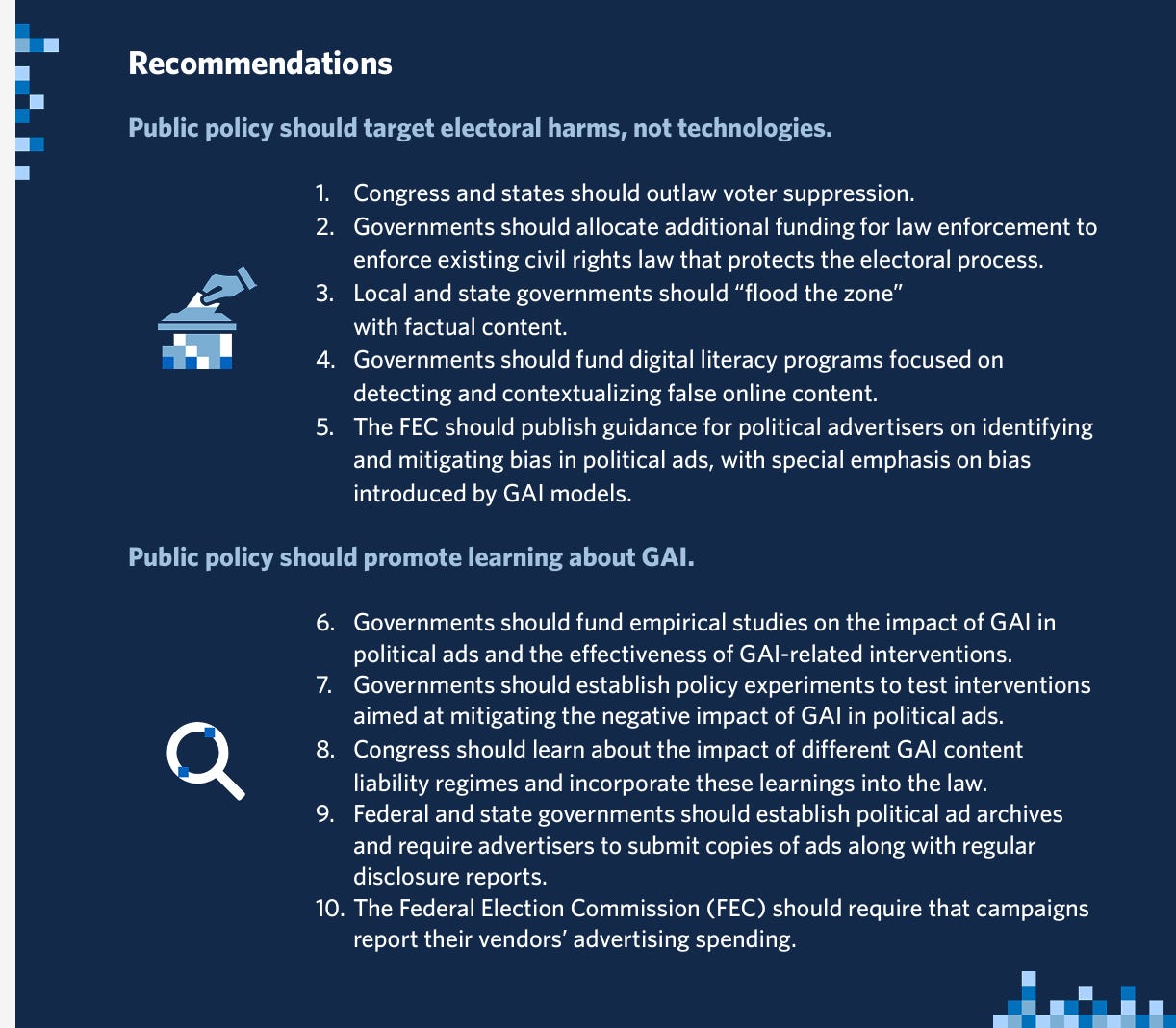

According to the report, policymakers have quickly introduced proposals to address these concerns. Most proposals have focused on three interventions: watermarks on all generative AI (GAI) content, disclaimers on political ads containing GAI content, and bans on deceptive GAI content in political ads.

Brennen and his co-author Matt Perault issued a series of recommendations posted below.

Following the release of OpenAI’s ChatGPT in November 2022, public attention on GAI skyrocketed. As the 2023 election approached, policymakers began to examine the potential impact of GAI in elections, and their attention has already turned to the likely increase in GAI use during the 2024 primary and general elections. The Senate recently held a hearing on AI and elections, and Sen. Chuck Schumer spoke.

“If we don’t act, we could soon live in a world where political campaigns regularly deploy totally fabricated but also totally believable images and footage of Democratic or Republican candidates distorting their statements and greatly harming their election chances,” Schumer said.

At the state level, both Republican and Democratic lawmakers have begun to examine what regulations would make sense. In Florida, Republican State Sen. Nick DiCeglie introduced legislation requiring disclaimers for political ads made with generative AI.

Public Citizen, a nonprofit consumer advocacy organization, reports that California, Michigan, Minnesota, Texas, and Washington have already enacted legislation to regulate AI-generated images, audio, or video. They are the only states to do so.

Tech companies have likewise begun to regulate AI-based ads. Meta, the parent company of Facebook, Threads, and Instagram, banned such ads from being created with its own AI service. Ads made with other services require disclaimers.

Brennen said that generative AI could have a more significant impact on smaller, down-ballot races. He’s skeptical of how persuasive AI-generated ads could be on undecided voters, but said they could further polarize voters and entrench biases.

Brennen cautioned against having a dystopian view, however. Misleading ads have always been possible with the use of Photoshop.

“What is really new here?” Brennen said.

On social media, I called for revising existing copyright laws to protect Hollywood’s unions during last year’s strikes. I also wrote on Facebook that Congress should form a commission to study AI's impacts on filmmaking and intellectual property creation. After interviewing experts, I suggested, lawmakers in Congress could write laws that make sense and don’t hamper innovation.

Congress did form a committee to study that, and the results are expected this year. Among the recommendations I expect is that actors should be permitted to decide before they die whether their likeness may ever be used in future AI creations. If, for instance, someone wanted to use Paul Newman’s films to create new footage of him speaking, that person would have to get permission from his wife, Joanne Woodward, or his family’s estate. If she stated that she didn’t want his or her likeness ever used after her death, anyone who used it could face civil and criminal liability. If an actor instead wanted to bequeath that right to their heirs, they could stipulate that in their will.

But back to the political realm.

To protect politicians and activists on both sides of the abortion issue, it makes sense to find an agreement on regulating advertising content created with generative AI. The possibility of ads that demonize abortion providers is very real and perilous. But on the other side, it’s also possible to create ads that would show inhumane indifference to women impregnated through rape or incest. Two can play the game of deceptive AI.

It’s not that AI can’t be used to improve elections. Underfunded campaigns could avoid paying production companies large sums to create ads. The money saved could instead be spent on disseminating the ads to a broader audience. That would somewhat level the playing field, though the same technology would also be available to better-funded campaigns.

These are issues that need to be addressed by lawmakers and those in politics. There needs to be a consensus on what should be permitted and forbidden.

Brennen issued a list of recommendations in his report.

“Regulation should be less concerned about specific technologies and more about the harm that happens,” he said.

“The other theme in the recommendations is about increasing our understanding about the harms of this content. And so we have a number of recommendations about helping us learn more about how these tools work, the capabilities and the impact that it has.”